Data Science at Flurry

Author: Soups Ranjan, PhD

Flurry, as an integral part of the mobile app ecosystem, has very interesting data sets and, consequently, highly challenging problems. Flurry has insights into the 370K apps that have the Flurry Analytics SDK installed, across the 1.1 billion unique mobile devices that we see per month. While there are parallels to the web world, quite a few data science problems at Flurry are brand new and not simply derivatives of an analogous web problem.

The most challenging problems that the data science team at Flurry deals with are estimating user characteristics (e.g., age, gender, and interests of app users) and predicting responses to advertising (and therefore which ads should be served to which people).

In this post, I’ll describe in depth our approach to one of these data science problems: the ad conversion estimation problem. Before we serve an ad to a user inside an app, we’d like to know the probability that the user will click on that ad. Given that we have historical information on which ads were clicked on, we can use that as our training sample and train a supervised learning algorithm such as Logistic Regression, Support Vector Machines, Decision Trees, Random Forests, etc.

Problem Definition: We treat this as a binary classification problem where, given a set of ad impressions and outcomes (whether the impression resulted in a conversion or not), we train a binary classifier. An impression contains details across three dimensions: user, app, and ad. Users in the Flurry system correspond to a particular device as opposed to a particular person. User features include details about the device (such as OS version and device model type) and prior engagement with mobile apps and ads. App features include details about the app, such as its category, average Daily Active Users, etc. Ad features capture details such as the advertiser’s bid price, ad format (e.g., whether it is a banner ad or interstitial), and ad type (e.g., whether it is a CPC [Cost-Per-Click], CPV [Cost-Per-Video-view], or CPI [Cost-Per-Install] ad). We also use time-specific features such as hour of day (in local time), day of week, and day of the month, as well as location-specific features such as country or city, as all of these have an important bearing on the probability of conversion.

More specifically, we use Logistic Regression to solve this problem. Logistic Regression predicts the probability of an event by fitting the data to a logistic curve. Consider an ad impression with outcome Y, described by the feature set X = {x1, x2, …, xn}, where Y is 1 when the impression converts and 0 when it doesn’t. We model the conversion probability with the logistic function f(z) = P(Y=1|X=x) = 1 / (1 + exp(-z)), where z is the logit, given as:

z = β0 + β1x1 + β2x2 + … + βnxn

where β0 is called the intercept and βi are the regression coefficients associated with each feature xi. Each regression coefficient describes the size of the contribution of that particular feature. A positive regression coefficient means that feature xi pushes the probability toward an outcome of 1, so the feature is predictive of a conversion. Similarly, a negative regression coefficient means that feature xi pushes the probability toward an outcome of 0, so the feature is predictive of a non-conversion.
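As a minimal sketch of the logit and logistic function described above, the snippet below scores one impression in Python. The feature names, coefficient values, and intercept are made up for illustration and are not Flurry’s actual model; features are stored as a dictionary so that only the features present in an impression contribute to the sum.

```python
import math

def logit(features, coefficients, intercept):
    """z = intercept + sum of coefficient * value over the features present."""
    return intercept + sum(
        coefficients.get(name, 0.0) * value for name, value in features.items()
    )

def conversion_probability(features, coefficients, intercept):
    """Logistic function f(z) = 1 / (1 + exp(-z)) applied to the logit."""
    z = logit(features, coefficients, intercept)
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical coefficients and impression, for illustration only.
coefficients = {"localHourOfDay=17": 0.16, "adFormat=banner": -0.40}
intercept = -5.0
impression = {"localHourOfDay=17": 1.0, "adFormat=banner": 1.0}

p = conversion_probability(impression, coefficients, intercept)
print(f"estimated conversion probability: {p:.5f}")
```

With every coefficient and the intercept at zero, the logit is 0 and the estimated probability is exactly 0.5, which is a handy sanity check.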

Data deluge or how much data is enough? One of the most important design criteria is the number of ad impressions we use to train a model. Our experience is that beyond a certain point, the marginal gains in the model’s performance from larger data sets are not worth the higher costs of using them. Hence, we use MapReduce jobs to pull data from our HBase tables and prepare a training set of tens of millions of ad impressions, which is then fed into a single high-performance machine where we train our models. Our feature space consists of tens of thousands of features, and as you can imagine, our data is highly sparse, with only some features taking values for any given impression.

Interpretable vs. black-box models: Recently, black-box techniques such as Random Forests have become highly popular among data scientists; however, our experience has been that simple models, such as Logistic Regression, achieve similar performance. Our usual approach is to first test a variety of models. If the performance differences are not significant, then we prefer simpler models, such as Logistic Regression, for their ease of interpretability.

Offline batch vs. Online learning: At one end of the spectrum, offline learning algorithms (also referred to as batch algorithms) can learn a sophisticated model via potentially multiple passes over the data, where the learning time is not a constraint. At the other end of the spectrum, there are online learning algorithms (implemented in tools such as the very popular Vowpal Wabbit), where we attempt to train a model in near real-time by making a single pass over the data, at the cost of giving up some accuracy. With this approach, we use the model as developed so far to score the current ad impression and then use this same impression to update the model’s parameters. The memory requirements are much lower because we iteratively learn new weights while considering only the current impression, so we can work with a much larger feature space. We can then explore non-linear feature spaces by considering polynomials of our regular features, e.g., (avgDAU^2, …, avgDAU^n), as well as capture the interaction (or cross-correlation) between features, e.g., avgDAU * hourOfDay, which intuitively captures information about the number of active users in this app at a particular hour of the day.
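The score-then-update loop described above can be sketched with plain stochastic gradient descent on the logistic loss. This is an illustration only, not how Vowpal Wabbit works internally; the learning rate, the `expand` helper, and the assumption that avgDAU and hourOfDay are pre-scaled to roughly [0, 1] are all hypothetical.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def expand(raw):
    """Add a squared term, an interaction term, and a constant bias feature."""
    feats = dict(raw)
    feats["avgDAU^2"] = raw["avgDAU"] ** 2
    feats["avgDAU*hourOfDay"] = raw["avgDAU"] * raw["hourOfDay"]
    feats["bias"] = 1.0
    return feats

def online_pass(stream, learning_rate=0.1):
    """One pass over (features, label) pairs: score first, then update."""
    weights = {}
    total_loss, count = 0.0, 0
    for raw, label in stream:
        x = expand(raw)
        z = sum(weights.get(k, 0.0) * v for k, v in x.items())
        p = sigmoid(z)
        # Score with the model as it stands before seeing this label.
        p_safe = min(max(p, 1e-12), 1.0 - 1e-12)
        total_loss -= label * math.log(p_safe) + (1 - label) * math.log(1.0 - p_safe)
        count += 1
        # Gradient step on the logistic loss for this single impression.
        for k, v in x.items():
            weights[k] = weights.get(k, 0.0) - learning_rate * (p - label) * v
    return weights, total_loss / count

# Hypothetical impressions: (scaled features, converted?)
stream = [
    ({"avgDAU": 0.5, "hourOfDay": 0.5}, 1),
    ({"avgDAU": 0.2, "hourOfDay": 0.9}, 0),
    ({"avgDAU": 0.8, "hourOfDay": 0.7}, 0),
]
weights, average_loss = online_pass(stream)
```

Because each impression is scored before its label is used, the running average loss doubles as a rough estimate of how the model performs on data it has not yet seen.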

Time to score a model: A highly important consideration is the ability to score an impression against the model within tens of milliseconds to estimate its conversion probability. The primary reason is that we don’t want to make a user who’s waiting to see an ad (and, consequently, waiting to get back to their app after the ad) wait for a long time, leading to a poor user experience. Because of this constraint, several models that would otherwise be well-suited to this problem simply don’t qualify. For instance, Random Forests don’t work in our case because, in order to score an impression, we would have to evaluate it against tens, or even hundreds, of Decision Trees, each of which may take a while to evaluate the impression feature-by-feature down a tree. Granted, one could parallelize the scoring so that each Decision Tree scores the impression on its own machine core; however, in our experimental evaluations, we didn’t see gains from Random Forests noticeable enough to justify going down this route.

Unbalanced data: Another interesting quirk of our problem space is that conversions are highly unlikely events. For instance, for CPI ad campaigns, we might see a few app installs per thousand impressions. Hence, the training set is highly unbalanced, with many, many more non-converting impressions than converting ones. A simple, yet useless, model may appear to perform quite well by predicting every impression as non-converting, but that would be self-defeating since we are actually interested in predicting which impressions lead to conversions. To avoid this, we assign a higher weight to converting impressions and a lower weight to non-converting ones so that we still get adequate performance when predicting conversions. We choose the weights via cross-validation: we set the weights in different ratios during different cross-validation experiments and then select the ratio that maximizes our performance.
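To make the imbalance concrete, here is a small sketch with made-up numbers (3 conversions in 1,000 impressions). It shows why accuracy is misleading for an always-negative model and how a class-weighted log loss penalizes missed conversions more heavily. The function name and the specific weight ratio are assumptions for illustration; in practice the ratio would be one of the candidates tried during cross-validation.

```python
import math

def weighted_log_loss(labels, probabilities, positive_weight, negative_weight=1.0):
    """Average log loss where each impression counts in proportion to its class weight."""
    total, weight_sum = 0.0, 0.0
    for y, p in zip(labels, probabilities):
        p = min(max(p, 1e-12), 1.0 - 1e-12)  # guard against log(0)
        w = positive_weight if y == 1 else negative_weight
        total -= w * (y * math.log(p) + (1 - y) * math.log(1.0 - p))
        weight_sum += w
    return total / weight_sum

# Hypothetical data: 3 conversions in 1,000 impressions.
labels = [1] * 3 + [0] * 997

# A model that predicts "no conversion" for everything.
predictions = [0] * 1000
accuracy = sum(1 for y, yhat in zip(labels, predictions) if y == yhat) / len(labels)
recall = sum(1 for y, yhat in zip(labels, predictions) if y == 1 and yhat == 1) / sum(labels)

# A model that assigns every impression the same tiny probability.
constant_p = [0.001] * 1000
unweighted = weighted_log_loss(labels, constant_p, positive_weight=1.0)
weighted = weighted_log_loss(labels, constant_p, positive_weight=997 / 3)

print(f"accuracy={accuracy:.3f} recall={recall:.3f}")
print(f"unweighted loss={unweighted:.4f} weighted loss={weighted:.4f}")
```

The always-negative model is 99.7% accurate yet finds no conversions at all, and the weighted loss is far larger than the unweighted one because the three missed conversions now carry as much total weight as the 997 non-conversions.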

Overfitting and Regularization: One of the advantages of Logistic Regression is that it allows us to determine which features are more important than others. To achieve this, we incorporate the Elastic Net methodology developed by Tibshirani et al. within Logistic Regression. Elastic Net allows a trade-off between L1- and L2-regularization (explained below) and gives us a model with lower test prediction error than non-regularized logistic regression, since some coefficients are adaptively shrunk towards smaller values. The formulation can be interpreted as follows. During the training phase, for given hyperparameters λ and 0 ≤ α ≤ 1, we find the coefficients β that minimize the logistic loss plus a penalty on the size of the coefficients; λ sets the overall strength of the penalty and α sets the mix between its L1 and L2 parts. When α = 1, this reduces to L1-regularized logistic regression, and when α = 0, to L2-regularized logistic regression. When multiple features are correlated with each other, L1-regularization tends to select one of them more or less arbitrarily while forcing the coefficients of the others to zero. This has the advantage that we can remove the noisy features and select the ones that matter more; however, in the case of correlated features, we run the risk of removing equally important ones. In contrast, L2-regularization does not force coefficients to exactly zero, and in the presence of multiple correlated features, it keeps all of them while assigning them similar weights. Setting the hyperparameter α to a value strictly between 0 and 1 hence allows us to keep the desirable characteristics of both L1- and L2-regularization.
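For reference, one common way to write the Elastic Net objective for logistic regression is shown below. This is the widely used glmnet-style convention and is an assumption here, not necessarily the exact form used at Flurry; in particular the 1/N scaling and the factor of 1/2 on the L2 term vary between references. Here N is the number of training impressions, y_j is the outcome of impression j, and z_j is its logit.

```latex
\hat{\beta} = \arg\min_{\beta_0,\,\beta}
  \left[
    -\frac{1}{N} \sum_{j=1}^{N} \Big( y_j z_j - \log\big(1 + e^{z_j}\big) \Big)
    + \lambda \left( \alpha \lVert \beta \rVert_1
                     + \frac{1 - \alpha}{2} \lVert \beta \rVert_2^2 \right)
  \right],
\qquad
z_j = \beta_0 + \sum_{i=1}^{n} \beta_i x_{ji}
```

Setting α = 1 leaves only the L1 term and α = 0 leaves only the L2 term, which matches the two special cases described above.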

Performance: To measure the performance of our machine-learning models, we compute Precision and Recall, defined as follows:

- Precision = Impressions correctly predicted as conversions / Total predicted conversions

- Recall = Impressions correctly predicted as conversions / Actual conversions
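The two definitions above translate directly into code. The sketch below computes precision and recall at a given score threshold; the labels, scores, and thresholds are made up for illustration, and sweeping the threshold is one way to trace out a precision-recall curve.

```python
def precision_recall(labels, scores, threshold):
    """Precision and recall when impressions scoring >= threshold are predicted as conversions."""
    true_positives = sum(
        1 for y, s in zip(labels, scores) if s >= threshold and y == 1
    )
    predicted_conversions = sum(1 for s in scores if s >= threshold)
    actual_conversions = sum(labels)
    precision = true_positives / predicted_conversions if predicted_conversions else 0.0
    recall = true_positives / actual_conversions if actual_conversions else 0.0
    return precision, recall

# Hypothetical outcomes (1 = converted) and model scores.
labels = [1, 0, 1, 0, 0, 1]
scores = [0.9, 0.8, 0.6, 0.4, 0.2, 0.1]

for threshold in (0.5, 0.05):
    precision, recall = precision_recall(labels, scores, threshold)
    print(f"threshold={threshold}: precision={precision:.2f} recall={recall:.2f}")
```

Lowering the threshold predicts more conversions, which can only keep recall the same or raise it, usually at the expense of precision.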

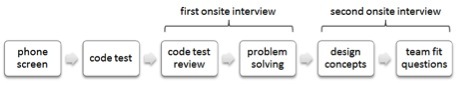

Fig. 1: Precision and Recall for different models

Figure 1 above shows the performance of various models when applied to a hold-out test data set. The plot shows precision and recall values, and in general a model whose precision-recall curve encloses more Area-Under-the-Curve (AUC) is better. By that measure, the L1-regularized Logistic Regression with λ = 0.001 performs the best, followed by nearly identical performance from λ = 0.01 and an online learning model built using Vowpal Wabbit. The most interesting insight here is that the best model is an L1-regularized one: the penalty shrinks the feature set, forcing many irrelevant features to have weights of 0, and hence the model avoids overfitting, leading to higher performance on the test data set. The other highly interesting insight is that an online learning model has performance very comparable to the batch-learned one.

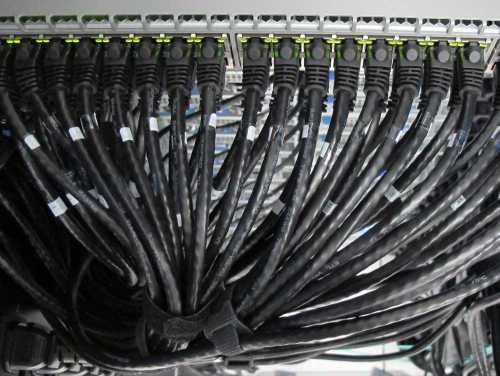

Fig. 2: Regression weights for localHourOfDay

Next, we take a look at the interpretability of Logistic Regression in greater detail. For instance, Figure 2 shows the coefficients assigned to different hours of the day. Note that the hours of 5 pm, 7 pm, and 8 pm have positive coefficients, indicating that an impression shown at these hours has a higher probability of being classified as converted, which matches our intuition since evening is prime time to show ads. For instance, the localHourOfDay of 5 pm has a coefficient of 0.16, and exp(0.16) = 1.1735, i.e., the odds p/(1-p) of classifying an impression as converted vs. not converted increase by 17.35% at 5 pm. In contrast, the localHourOfDay of 1 am is assigned a negative coefficient, which once again matches our intuition: an impression shown at this hour has a lower chance of being classified as converted.
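The odds arithmetic above is easy to reproduce. The sketch below converts the 5 pm coefficient of 0.16 into an odds multiplier and then applies it to a baseline conversion probability; the baseline of 0.5% is a made-up value chosen only to show the effect on a probability rather than on the odds.

```python
import math

def odds_multiplier(coefficient):
    """Factor by which the odds p/(1-p) change when an indicator feature is on."""
    return math.exp(coefficient)

def shift_probability(baseline_probability, coefficient):
    """Apply the odds multiplier to a baseline probability and convert back."""
    odds = baseline_probability / (1.0 - baseline_probability)
    new_odds = odds * odds_multiplier(coefficient)
    return new_odds / (1.0 + new_odds)

multiplier = odds_multiplier(0.16)
print(f"odds multiplier at 5 pm: {multiplier:.4f}")

# Hypothetical baseline conversion probability of 0.5%.
shifted = shift_probability(0.005, 0.16)
print(f"probability moves from 0.00500 to {shifted:.5f}")
```

Note that the 17.35% increase applies to the odds, not to the probability itself, although for very small probabilities the two are nearly the same.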

Conclusion: Hope you enjoyed reading our introductory blog post on Data Science at Flurry. If you have any feedback or would like to learn more about any particular content mentioned here, please do leave a comment below. And above all, stay tuned for more insightful articles from the Data Science team!